LLMs do not unexpectedly acquire new skills as they grow. You just need to know how to measure their performance correctly.

Researchers believed that large language models (LLMs) could improve in sudden, unexpected ways. This posed a serious problem for the development of artificial intelligence. A recent study has demolished this hypothesis. Proper measurement makes it possible to know when LLMs acquire skills.

Let us remember that large language models are essential for the development of artificial intelligence. OpenAI's famous generative chat software, ChatGPT, depends on LLMs such as GPT-4 or GPT-4 Turbo.

The BIG-Bench, LLMs and their skills

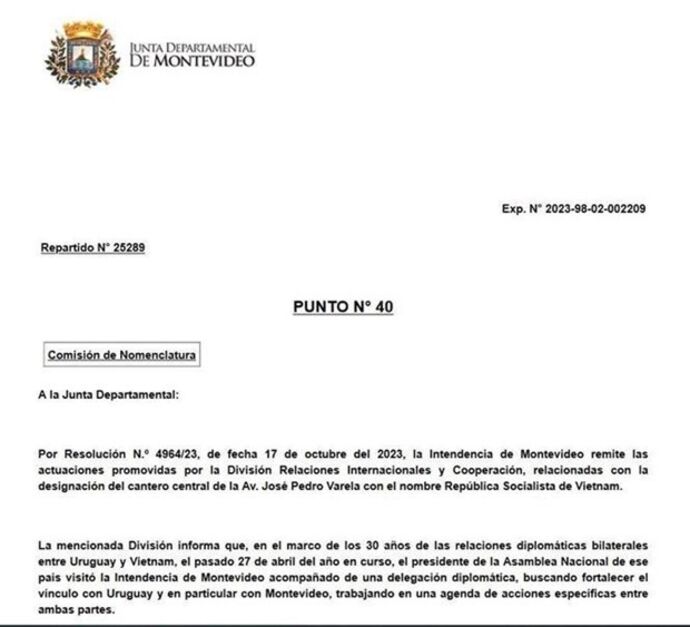

It's 2022. More than 400 researchers launch a major project to test large language models. Nicknamed BIG-Bench, it consists of giving LLMs a series of 204 tasks. For most tasks, performance increases steadily with the size of the model. Occasionally, however, researchers observed a leap in performance after a period of little or no progress.

The authors call this behavior a “breakthrough,” similar to a phase transition in physics. They also highlight the unpredictable nature of this behavior. This unexpected development raises questions in terms of safety. Indeed, an unpredictable generative artificial intelligence can be dangerous.

BIG-Bench's conclusions have been called into question

This week, three researchers from Stanford University published a paper detailing their work on BIG-Bench's conclusions. This new research suggests that the sudden emergence of skills is merely a result of how researchers measure LLM performance.

These skills are neither unexpected nor surprising, the trio of researchers argues. “The shift is more predictable,” said Sanmi Koyejo. This computer scientist at Stanford University is the senior author of the paper.

Furthermore, LLMs train by analyzing large amounts of text. They identify connections between words. The more parameters a model has, the more connections it can find. For reference, GPT-2 has 1.5 billion parameters, while GPT-3.5 has 350 billion. GPT-4, which powers Microsoft Copilot, reportedly uses 1.75 trillion parameters.

The importance of the method of evaluating LLMs and their skills

The rapid growth of large language models has led to an impressive improvement in their performance. The Stanford trio acknowledges that these LLMs become more effective as they scale up. However, whether that improvement looks sudden or smooth depends on the choice of evaluation metric rather than on the inner workings of the model.

According to BIG-Bench, OpenAI's GPT-3 and Google's LaMDA showed a surprising ability to solve addition problems once they had more parameters. However, according to the new study, this “emergence” depends on the metric used. With a metric that gives partial credit, the improvement appears gradual and predictable.
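To make the role of the metric concrete, here is a minimal Python sketch. It is a toy illustration, not the study's code: the per-digit accuracies and the 10-digit answer length are made-up assumptions, and the function names `exact_match` and `partial_credit` are hypothetical. It scores the same steadily improving model in two ways: all-or-nothing (the whole answer must be right) and partial credit (the fraction of digits that are right).

```python
# Toy illustration: one underlying model quality, scored with two metrics.
# All numbers below are invented for the example, not measurements.

def exact_match(per_digit_accuracy: float, num_digits: int) -> float:
    """Chance that every digit of the answer is correct (all-or-nothing),
    assuming each digit is right independently with the same probability."""
    return per_digit_accuracy ** num_digits


def partial_credit(per_digit_accuracy: float) -> float:
    """Expected fraction of correct digits (partial credit)."""
    return per_digit_accuracy


# Hypothetical per-digit accuracy, rising steadily as the model grows.
per_digit_by_size = [0.50, 0.60, 0.70, 0.80, 0.90, 0.99]
NUM_DIGITS = 10  # assumed length of the answer to an addition problem

for p in per_digit_by_size:
    print(
        f"per-digit={p:.2f}  "
        f"partial={partial_credit(p):.2f}  "
        f"exact={exact_match(p, NUM_DIGITS):.3f}"
    )
```

Under these assumptions, the partial-credit column rises in even steps, while the exact-match column stays close to zero for the smaller models and then climbs steeply. The underlying ability improves smoothly in both cases; only the scoring rule makes it look like a sudden jump.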

In short, this shift in how emergence is understood is no small matter. It will certainly encourage researchers to develop a science of predicting the behavior of large language models.
