With its AutoDMP (Automated DREAMPlace-based Macro Placement) tool, NVIDIA, like Synopsys, is using artificial intelligence to design chips. According to the company, the results are obtained faster and are of the same quality as those produced by older methods.

Recently, Michael Kagan, NVIDIA's CTO, argued that artificial intelligence, unlike cryptocurrencies, has a bright future and genuinely benefits humanity. When it comes to chip design, Synopsys has already shown how its DSO.ai (Design Space Optimization AI) tool helps designers reduce "engineering resources", thanks to the ability of its algorithms to explore many different designs in record time; the tool has already been used in 100 commercial tape-outs. In an article published earlier this week, Anthony Agnesina and Mark Ren, both members of NVIDIA Research, presented a similar approach developed by their employer: AutoDMP (Automated DREAMPlace-based Macro Placement).

The authors explain how artificial intelligence and GPU-accelerated machine learning are changing the game in chip design within electronic design automation (EDA). According to them, these technologies make it possible to "accelerate the placement process by more than 30 times."

Faster design and equal quality

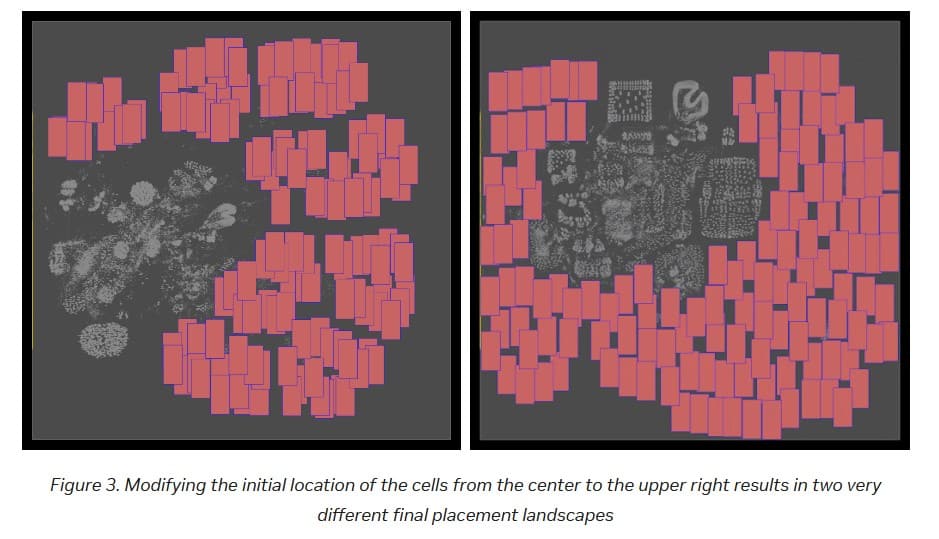

As you might expect, what the authors call global placement has a significant impact, because it "directly affects many design parameters, such as area and power consumption." Anthony Agnesina and Mark Ren explain that the open-source analytical placer DREAMPlace serves as the "placement engine". Concretely, it "formulates the placement problem as a wirelength optimization problem under placement density constraints and solves it numerically"; more specifically, "DREAMPlace numerically computes wirelength and density gradients using GPU-accelerated algorithms built on the PyTorch framework." The goal, of course, is to speed up and improve the tedious process of finding optimal positions for a chip's components.
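To make the idea of "wirelength optimization under density constraints, solved numerically with gradients" more concrete, here is a minimal toy sketch in plain Python. It is not DREAMPlace's code or AutoDMP's method: the netlist, the cell names and every parameter (`gamma`, `lam`, `sigma`, `lr`) are hypothetical. Wirelength is smoothed with the log-sum-exp model, one of the standard differentiable approximations of half-perimeter wirelength (HPWL). The density constraint is replaced by a simple Gaussian overlap penalty between pairs of cells, whereas DREAMPlace follows an ePlace-style electrostatic density model and computes its gradients on the GPU with PyTorch.

```python
import math
import random

# Hypothetical toy netlist, for illustration only: four movable cells
# chained between two fixed I/O pins, plus one extra net between a and c.
FIXED = {"in": (0.0, 0.0), "out": (10.0, 10.0)}
MOVABLE = ["a", "b", "c", "d"]
NETS = [["in", "a"], ["a", "b"], ["b", "c"], ["c", "d"], ["d", "out"], ["a", "c"]]


def hpwl(pos, nets):
    """Half-perimeter wirelength: the usual (non-smooth) quality metric."""
    total = 0.0
    for net in nets:
        xs = [pos[n][0] for n in net]
        ys = [pos[n][1] for n in net]
        total += (max(xs) - min(xs)) + (max(ys) - min(ys))
    return total


def lse(coords, gamma):
    """Log-sum-exp approximation of max(coords) - min(coords) and its gradient."""
    hi, lo = max(coords), min(coords)
    # Shift the exponents by the max/min for numerical stability.
    e_hi = [math.exp((c - hi) / gamma) for c in coords]
    e_lo = [math.exp((lo - c) / gamma) for c in coords]
    s_hi, s_lo = sum(e_hi), sum(e_lo)
    value = (hi + gamma * math.log(s_hi)) - (lo - gamma * math.log(s_lo))
    grad = [h / s_hi - l / s_lo for h, l in zip(e_hi, e_lo)]
    return value, grad


def objective_and_grad(pos, gamma, lam, sigma):
    """Smooth wirelength plus lam * toy density penalty; gradient for movable cells only."""
    total = 0.0
    grad = {n: [0.0, 0.0] for n in MOVABLE}
    for net in NETS:
        for axis in (0, 1):
            value, g = lse([pos[n][axis] for n in net], gamma)
            total += value
            for n, gi in zip(net, g):
                if n in grad:  # fixed pins never move, so they get no gradient
                    grad[n][axis] += gi
    # Toy stand-in for a density constraint: a Gaussian overlap penalty per cell pair.
    for i, a in enumerate(MOVABLE):
        for b in MOVABLE[i + 1:]:
            dx = pos[a][0] - pos[b][0]
            dy = pos[a][1] - pos[b][1]
            w = math.exp(-(dx * dx + dy * dy) / (2 * sigma ** 2))
            total += lam * w
            gx = -lam * w * dx / sigma ** 2  # d(penalty)/d(x_a); x_b gets the opposite
            gy = -lam * w * dy / sigma ** 2
            grad[a][0] += gx
            grad[a][1] += gy
            grad[b][0] -= gx
            grad[b][1] -= gy
    return total, grad


def place(iters=500, lr=0.05, gamma=0.5, lam=2.0, sigma=1.0, seed=0):
    """Plain gradient descent from a random start; returns (initial, final) positions."""
    rng = random.Random(seed)
    pos = dict(FIXED)
    for n in MOVABLE:
        pos[n] = (rng.uniform(0.0, 10.0), rng.uniform(0.0, 10.0))
    start = dict(pos)
    for _ in range(iters):
        _, grad = objective_and_grad(pos, gamma, lam, sigma)
        for n in MOVABLE:
            x, y = pos[n]
            pos[n] = (x - lr * grad[n][0], y - lr * grad[n][1])
    return start, pos


if __name__ == "__main__":
    start, final = place()
    print(f"HPWL before: {hpwl(start, NETS):.2f}  after: {hpwl(final, NETS):.2f}")
```

The wirelength term pulls connected cells toward the path between the fixed pins, while the penalty keeps them from piling on top of one another. Production placers apply the same gradient-based principle to millions of cells, which is why computing those gradients on a GPU matters.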

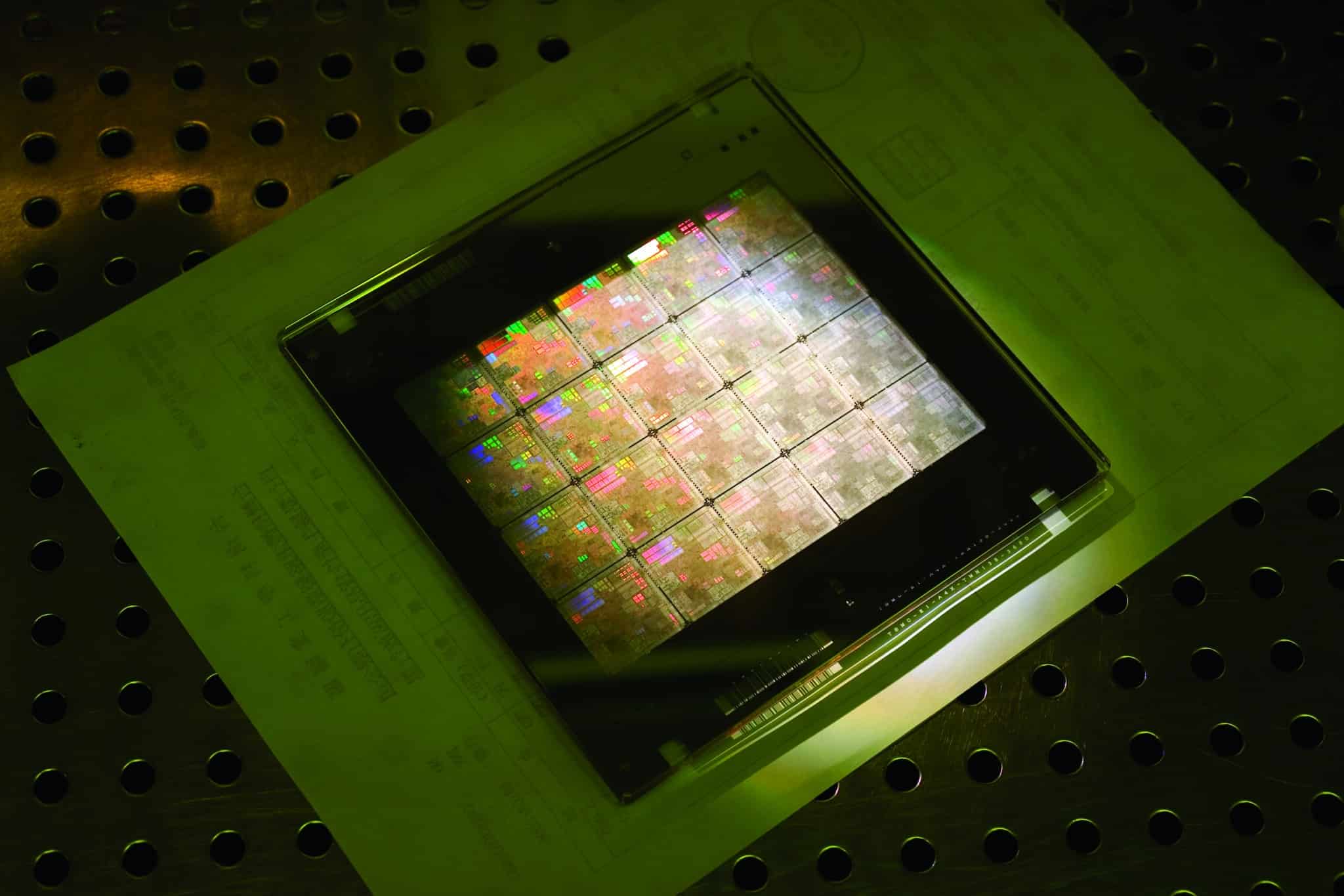

As an example, NVIDIA cites the placement of a 256-core RISC-V CPU comprising 2.7 million standard cells and 320 memory macros. It took AutoDMP just 3.5 hours on a single NVIDIA DGX A100 workstation to find an optimal layout.

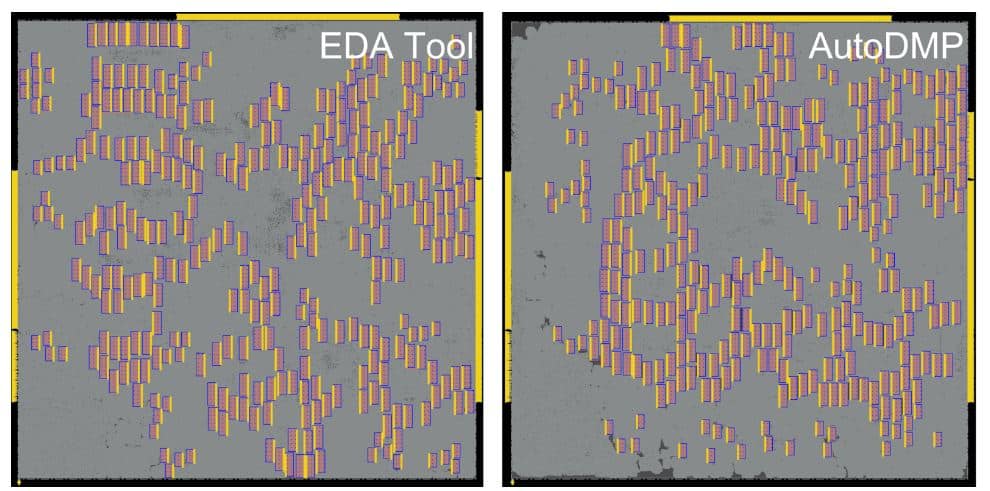

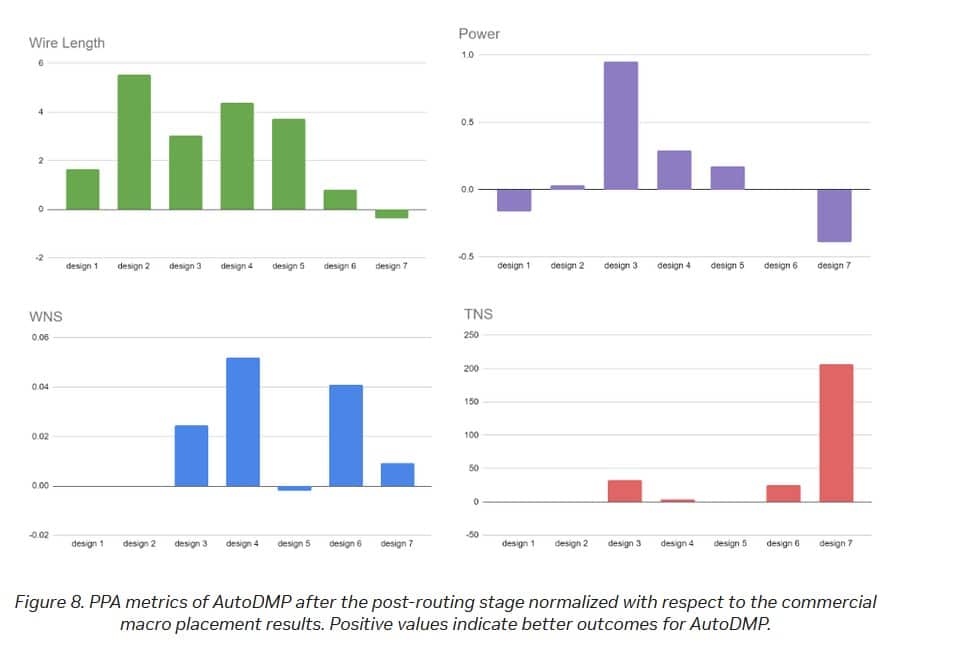

According to the authors, the benefits of AutoDMP are not limited to design speed; the gains are also qualitative. They give the example of seven alternative designs produced for a test chip, in which "most of the designs […] [are] equal to or better than the one generated by the commercial flow."

cuLitho, NVIDIA’s new software library for computational lithography

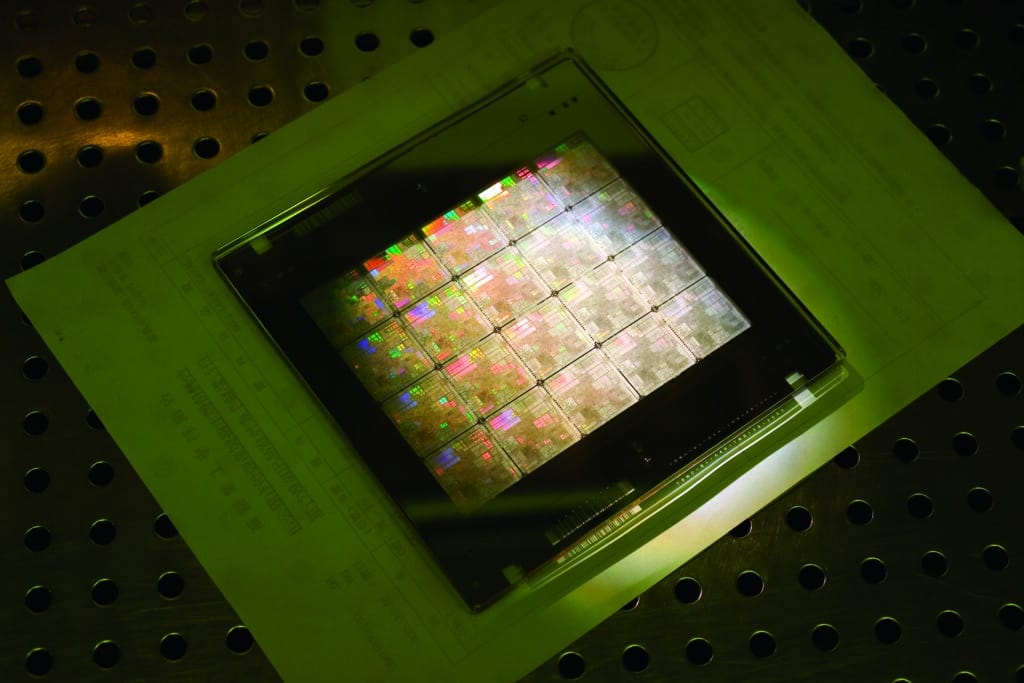

In a different vein, though still related to chip design, NVIDIA also introduced its new cuLitho software library at GTC 2023; it is intended to speed up computational lithography.

NVIDIA says its new approach allows 500 DGX H100 systems, with 4,000 Hopper GPUs in total, to do the same work as 40,000 CPUs (models not specified), but 40 times faster and with 9 times less power. According to NVIDIA, this speedup will cut the time needed to produce a photomask from several weeks to eight hours.
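The hardware figures quoted by NVIDIA are consistent with each other. Assuming the standard configuration of eight H100 GPUs per DGX H100 system (a per-system count the article itself does not state), the arithmetic works out as follows:

```latex
\[
500\ \text{DGX H100} \times 8\ \frac{\text{GPUs}}{\text{system}} = 4\,000\ \text{Hopper GPUs},
\qquad
\frac{40\,000\ \text{CPUs}}{4\,000\ \text{GPUs}} = 10\ \frac{\text{CPUs}}{\text{GPU}}
\]
```

In other words, on the workload NVIDIA describes, each Hopper GPU stands in for about ten CPUs.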

NVIDIA states that TSMC and Synopsys are integrating its cuLitho software library, and that equipment manufacturer ASML also plans to use it.

Sources: NVIDIA (AutoDMP) and NVIDIA (cuLitho)
